MNIST 28×28 Text→Image (FiLM + CFG)

This repository provides a minimal diffusion model for generating MNIST digits (0–9) from text prompts. It uses a tiny UNet with FiLM conditioning and Classifier-Free Guidance (CFG).
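To make the two conditioning ideas concrete, here is a minimal, dependency-free sketch of FiLM modulation and the CFG combination step. It is not taken from `pipeline_mnist.py`: the function names `film` and `cfg_combine` are hypothetical, and the FiLM form used here (`gamma * x + beta` per channel) is the textbook formulation, which may differ from what the repository's UNet actually does.

```python
def film(features, gamma, beta):
    """Feature-wise linear modulation (assumed textbook form).

    features: list of channels, each a list of activations.
    gamma, beta: one scale and one shift per channel, normally produced
    from the conditioning embedding (class + timestep).
    """
    return [
        [g * v + b for v in channel]
        for channel, g, b in zip(features, gamma, beta)
    ]


def cfg_combine(eps_uncond, eps_cond, guidance_scale):
    """Classifier-free guidance: move from the unconditional noise
    prediction toward the conditional one by `guidance_scale`."""
    return [u + guidance_scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


if __name__ == "__main__":
    # Two channels with two activations each.
    print(film([[1.0, 2.0], [3.0, 4.0]], gamma=[2.0, 0.5], beta=[0.0, 1.0]))
    # Guidance scale 2.5 matches the value used in the quickstart call.
    print(cfg_combine([0.0, 1.0], [1.0, 1.0], guidance_scale=2.5))
```

With `guidance_scale=0` the result is the unconditional prediction, and larger values push the sample harder toward the prompted digit.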

Quickstart (dynamic import)

from huggingface_hub import snapshot_download

import importlib.util, sys, os

local_dir = snapshot_download("starkdv123/mnist-28px-text2img")

pipe_path = os.path.join(local_dir, "pipeline_mnist.py")

spec = importlib.util.spec_from_file_location("pipeline_mnist", pipe_path)

mod = importlib.util.module_from_spec(spec)

sys.modules[spec.name] = mod

spec.loader.exec_module(mod)

Pipe = getattr(mod, "MNISTTextToImagePipeline")

pipe = Pipe.from_pretrained(local_dir)

img = pipe("seven", num_inference_steps=120, guidance_scale=2.5).images[0]

img.save("seven.png")
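The prompt parsing itself lives in `pipeline_mnist.py` and is not reproduced here. As a rough illustration of how a prompt such as "seven" could be turned into a class id, the sketch below uses a hypothetical helper, `prompt_to_class_id`, and assumes that unrecognized prompts fall back to the null class id 10 listed under the training details. The real pipeline may behave differently.

```python
import re

# Hypothetical lookup table; the actual pipeline may accept other spellings.
WORD_TO_DIGIT = {
    "zero": 0, "one": 1, "two": 2, "three": 3, "four": 4,
    "five": 5, "six": 6, "seven": 7, "eight": 8, "nine": 9,
}
NULL_CLASS_ID = 10  # unconditional class used for CFG (from the training details)


def prompt_to_class_id(prompt):
    """Return the first digit named in the prompt, as a word or a numeral.

    Falls back to NULL_CLASS_ID when no digit is found (an assumption).
    """
    for token in re.findall(r"[a-z]+|[0-9]", prompt.lower()):
        if token in WORD_TO_DIGIT:
            return WORD_TO_DIGIT[token]
        if token.isdigit():
            return int(token)
    return NULL_CLASS_ID


if __name__ == "__main__":
    for text in ["seven", "A handwritten 3", "cat"]:
        print(text, "->", prompt_to_class_id(text))
```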

Alternative (sys.path)

from huggingface_hub import snapshot_download

import sys

local_dir = snapshot_download("starkdv123/mnist-28px-text2img")

sys.path.append(local_dir)

from pipeline_mnist import MNISTTextToImagePipeline as Pipe

pipe = Pipe.from_pretrained(local_dir)

Alternative (Diffusers custom_pipeline)

from diffusers import DiffusionPipeline

pipe = DiffusionPipeline.from_pretrained("starkdv123/mnist-28px-text2img", custom_pipeline="pipeline_mnist")

# Note: requires diffusers>=0.30 and may still be sensitive to custom components.

Training details

- Dataset: MNIST train split

- Image size: 28×28 (grayscale)

- Normalizer: (x - 0.5) / 0.5 → images scaled to [-1,1]; the network predicts the noise added in this normalized space

- Timesteps: T=500, betas 1e-05→0.02

- UNet base channels: 32; cond dim: 64; time dim: 128

- CFG: drop‑cond p=0.1, null class id=10

- Optimizer: AdamW, lr=0.001, batch=128, epochs=10
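The hyperparameters above can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the repository's training code: the beta schedule is assumed to be linear between the two listed endpoints (the source only gives the endpoints), and all function names are hypothetical.

```python
import random

T = 500                          # number of diffusion timesteps
BETA_START, BETA_END = 1e-5, 0.02
NULL_CLASS_ID = 10               # class id used when the condition is dropped
DROP_PROB = 0.1                  # probability of dropping the condition


def linear_betas(num_steps=T, start=BETA_START, end=BETA_END):
    """Assumed linear schedule from `start` to `end` over `num_steps`."""
    step = (end - start) / (num_steps - 1)
    return [start + i * step for i in range(num_steps)]


def alphas_cumprod(betas):
    """Running product of (1 - beta_t), used to noise an image in one shot."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def normalize(x):
    """Map a pixel in [0, 1] to [-1, 1]."""
    return (x - 0.5) / 0.5


def denormalize(z):
    """Inverse of normalize, clamped back to [0, 1]."""
    return min(1.0, max(0.0, z * 0.5 + 0.5))


def drop_condition(label, rng, p=DROP_PROB):
    """Replace the label with the null class with probability p (CFG training)."""
    return NULL_CLASS_ID if rng.random() < p else label


if __name__ == "__main__":
    betas = linear_betas()
    acp = alphas_cumprod(betas)
    print(len(betas), betas[0], betas[-1], acp[-1])
    rng = random.Random(0)
    print([drop_condition(7, rng) for _ in range(20)])
```

Dropping the label during training is what lets a single network produce both the conditional and the unconditional noise predictions that CFG combines at sampling time.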

Files included

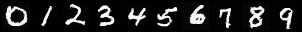

- model.safetensors: trained UNet weights

- config.json: model hyperparameters

- scheduler_config.json: noise schedule

- pipeline_mnist.py: custom pipeline definition

- model_index.json: metadata for Diffusers

- samples/grid_0_9.png: final 0–9 grid

- samples/grid_e*.png: per‑epoch grids (training progress)

Training progress (epoch grids)
