Vulnerable Library - jackson-databind-2.9.9.jar

General data-binding functionality for Jackson: works on core streaming API

Library home page: http://github.com/FasterXML/jackson

Path to dependency file: /example/quartz-jdbc/pom.xml

Path to vulnerable library: /root/.m2/repository/com/fasterxml/jackson/core/jackson-databind/2.9.9/jackson-databind-2.9.9.jar

Dependency Hierarchy:

- spring-boot-starter-web-2.1.6.RELEASE.jar (Root Library)

- spring-boot-starter-json-2.1.6.RELEASE.jar

- :x: **jackson-databind-2.9.9.jar** (Vulnerable Library)

Vulnerability Details

A Polymorphic Typing issue was discovered in FasterXML jackson-databind 2.x through 2.9.9. When Default Typing is enabled (either globally or for a specific property) for an externally exposed JSON endpoint and the service has JDOM 1.x or 2.x jar in the classpath, an attacker can send a specifically crafted JSON message that allows them to read arbitrary local files on the server.

Publish Date: 2019-06-19

URL: CVE-2019-12814

CVSS 3 Score Details (5.9)

Base Score Metrics:

- Exploitability Metrics:

- Attack Vector: Network

- Attack Complexity: High

- Privileges Required: None

- User Interaction: None

- Scope: Unchanged

- Impact Metrics:

- Confidentiality Impact: High

- Integrity Impact: None

- Availability Impact: None

For more information on CVSS3 Scores, click here.

Suggested Fix

Type: Upgrade version

Origin: https://github.com/FasterXML/jackson-databind/issues/2341

Release Date: 2019-06-19

Fix Resolution: 2.7.9.6, 2.8.11.4, 2.9.9.1, 2.10.0

Vulnerable Library - jackson-databind-2.9.2.jar

General data-binding functionality for Jackson: works on core streaming API

Library home page: http://github.com/FasterXML/jackson

Path to dependency file: zaproxy/buildSrc/build.gradle.kts

Path to vulnerable library: /home/wss-scanner/.gradle/caches/modules-2/files-2.1/com.fasterxml.jackson.core/jackson-databind/2.9.2/1d8d8cb7cf26920ba57fb61fa56da88cc123b21f/jackson-databind-2.9.2.jar

Dependency Hierarchy:

- github-api-1.95.jar (Root Library)

- :x: **jackson-databind-2.9.2.jar** (Vulnerable Library)

Found in HEAD commit: 13d0feb89469fcd0caba70e9b151d20ad1849e95

Found in base branch: develop

Vulnerability Details

A Polymorphic Typing issue was discovered in FasterXML jackson-databind 2.x before 2.9.9. When Default Typing is enabled (either globally or for a specific property) for an externally exposed JSON endpoint, the service has the mysql-connector-java jar (8.0.14 or earlier) in the classpath, and an attacker can host a crafted MySQL server reachable by the victim, an attacker can send a crafted JSON message that allows them to read arbitrary local files on the server. This occurs because of missing com.mysql.cj.jdbc.admin.MiniAdmin validation.

Publish Date: 2019-05-17

URL: CVE-2019-12086

CVSS 3 Score Details (7.5)

Base Score Metrics:

- Exploitability Metrics:

- Attack Vector: Network

- Attack Complexity: Low

- Privileges Required: None

- User Interaction: None

- Scope: Unchanged

- Impact Metrics:

- Confidentiality Impact: High

- Integrity Impact: None

- Availability Impact: None

For more information on CVSS3 Scores, click here.

Suggested Fix

Type: Upgrade version

Origin: https://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2019-12086

Release Date: 2019-05-17

Fix Resolution: 2.9.9

Your website URL (or attach your complete example):

You can target our live site, since the issue relates to being unable to enter data in the payment forms: https://www.change.org/

Your complete test code (or attach your test files):

```js
import { t, Selector } from 'testcafe';

fixture('Join Membership with credit card').page('https://www.change.org/');

test('Guest user clicks contribute directly', async () => {
    // Open the membership landing page and wait for the contribute button.
    await t
        .navigateTo('s/member')
        .expect(Selector('[data-testid="member_landing_page_inline_contribute_button"]').visible)
        .ok();

    await t
        .click(Selector('[data-testid="member_landing_page_inline_contribute_button"]'), { speed: 0.3 })
        .expect(Selector('[data-testid="member_payment_form"]').visible)
        .ok();

    // Choose the credit card option; the card fields are rendered in iframes.
    await t
        .click(Selector('[data-testid="payment-option-button-creditCard"]'))
        .expect(Selector('.iframe-form-element').visible)
        .ok();

    const emailAddressInput = Selector('[data-testid="input_email"]').filterVisible();
    const confirmationEmailInput = Selector('[data-testid="input_confirmation_email"]').filterVisible();
    const firstNameInput = Selector('[data-testid="input_first_name"]');
    const lastNameInput = Selector('[data-testid="input_last_name"]');

    await t
        .typeText(emailAddressInput, 'email@email.com')
        .typeText(confirmationEmailInput, 'email@email.com')
        .typeText(firstNameInput, 'Your')
        .typeText(lastNameInput, 'Name');

    // Typing into the card number field inside the iframe is the step that fails.
    await t
        .switchToIframe(Selector('[data-testid="credit-card-number"] iframe'))
        .typeText(Selector('input[name="cardnumber"]'), '1234123412341234', { replace: true })
        .expect(Selector('input[name="cardnumber"]').value)
        .eql('1234 1234 1234 1234')
        .switchToMainWindow();
});
```

Your complete configuration file (if any):

```
N/A
```

Your complete test report:

```
Guest user clicks contribute directly

1) AssertionError: expected '' to deeply equal '1234 1234 1234 1234'

   Browser: Safari 13.0.5 / macOS 10.15.3

      31 |
      32 |    await t
      33 |        .switchToIframe(Selector('[data-testid="credit-card-number"] iframe'))
      34 |        .typeText(Selector('input[name="cardnumber"]'), '1234123412341234', { replace: true })
      35 |        .expect(Selector('input[name="cardnumber"]').value)
    > 36 |        .eql('1234 1234 1234 1234')
      37 |        .switchToMainWindow();
      38 |});
      39 |

      at
```

Screenshots:

```
N/A
```

Browser Configuration

- mixed active content blocked: false

- image.mem.shared: true

- buildID: 20190622041859

- tracking content blocked: false

- gfx.webrender.blob-images: true

- hasTouchScreen: true

- mixed passive content blocked: false

- gfx.webrender.enabled: false

- gfx.webrender.all: false

- channel: default

Console Messages:

[u'[JavaScript Warning: ""The resource at https://pagead2.googlesyndication.com/pagead/js/adsbygoogle.js was blocked because content blocking is enabled."" {file: ""https://smallbusiness.chron.com/rename-dual-boot-windows-start-up-63870.html"" line: 0}]', u'[JavaScript Warning: ""The resource at https://cdn.taboola.com/libtrc/hearstlocalnews-chronmobile/loader.js was blocked because content blocking is enabled."" {file: ""https://smallbusiness.chron.com/rename-dual-boot-windows-start-up-63870.html"" line: 0}]', u'[JavaScript Warning: ""The resource at https://pagead2.googlesyndication.com/pagead/js/adsbygoogle.js was blocked because content blocking is enabled."" {file: ""https://smallbusiness.chron.com/rename-dual-boot-windows-start-up-63870.html"" line: 0}]', u'[JavaScript Warning: ""The resource at https://pagead2.googlesyndication.com/pagead/js/adsbygoogle.js was blocked because content blocking is enabled."" {file: ""https://smallbusiness.chron.com/rename-dual-boot-windows-start-up-63870.html"" line: 0}]', u'[JavaScript Warning: ""The resource at https://pagead2.googlesyndication.com/pagead/js/adsbygoogle.js was blocked because content blocking is enabled."" {file: ""https://smallbusiness.chron.com/rename-dual-boot-windows-start-up-63870.html"" line: 0}]', u'[JavaScript Warning: ""The resource at https://nexus.ensighten.com/hearst/news-3p/Bootstrap.js was blocked because content blocking is enabled."" {file: ""https://smallbusiness.chron.com/rename-dual-boot-windows-start-up-63870.html"" line: 0}]', u'[JavaScript Warning: ""Loading failed for the

`);

}

else

res.end();

})

.listen(4100);

```

#### Provide the test code and the tested page URL (if applicable)

Test code

```js
import { Role, ClientFunction, Selector } from 'testcafe';

fixture `Test authentication`
    .page `http://localhost:4100/`;

const role = Role(`http://localhost:4100/#login`, async t => await t.click('input'), { preserveUrl: true });

test('first login', async t => {
    await t
        .wait(3000)
        .useRole(role)
        .expect(Selector('h1').innerText).eql('Authorized');
});

test('second login', async t => {
    await t
        .wait(3000)
        .useRole(role)
        .expect(Selector('h1').innerText).eql('Authorized');
});
```

### Workaround

```js
import { Role, ClientFunction, Selector } from 'testcafe';

fixture `Test authentication`
    .page `http://localhost:4100/`;

const role = Role(`http://localhost:4100/#login`, async t => await t.click('input'), { preserveUrl: true });

// Force a full reload so the restored storage is picked up by the page.
const reloadPage = new ClientFunction(() => location.reload(true));

const fixedUseRole = async (t, role) => {
    await t.useRole(role);
    await reloadPage();
};

test('first login', async t => {
    await t.wait(3000);
    await fixedUseRole(t, role);
    await t.expect(Selector('h1').innerText).eql('Authorized');
});

test('second login', async t => {
    await t.wait(3000);
    await fixedUseRole(t, role);
    await t.expect(Selector('h1').innerText).eql('Authorized');
});
```

### Specify your

* testcafe version: 0.19.0",1.0,"Role doesn't work when page navigation doesn't trigger page reloading - ### Are you requesting a feature or reporting a bug?

bug

### What is the current behavior?

Role doesn't work after first-time initialization. `Cookie`, `localStorage` and `sessionStorage` should be restored when a preserved page is loaded but the page changes only the hash and it isn't reloaded after navigating.

### What is the expected behavior?

Page must be reloaded after the `useRole` function call.

### How would you reproduce the current behavior (if this is a bug)?

Node server:

```js
const http = require('http');

http
    .createServer((req, res) => {
        if (req.url === '/') {
            res.writeHead(200, { 'content-type': 'text/html' });
            res.end(`
log in
`);
        }
        else
            res.end();
    })
    .listen(4100);
```

#### Provide the test code and the tested page URL (if applicable)

Test code

```js
import { Role, ClientFunction, Selector } from 'testcafe';

fixture `Test authentication`
    .page `http://localhost:4100/`;

const role = Role(`http://localhost:4100/#login`, async t => await t.click('input'), { preserveUrl: true });

test('first login', async t => {
    await t
        .wait(3000)
        .useRole(role)
        .expect(Selector('h1').innerText).eql('Authorized');
});

test('second login', async t => {
    await t
        .wait(3000)
        .useRole(role)
        .expect(Selector('h1').innerText).eql('Authorized');
});
```

### Workaround

```js
import { Role, ClientFunction, Selector } from 'testcafe';

fixture `Test authentication`
    .page `http://localhost:4100/`;

const role = Role(`http://localhost:4100/#login`, async t => await t.click('input'), { preserveUrl: true });

// Force a full reload so the restored storage is picked up by the page.
const reloadPage = new ClientFunction(() => location.reload(true));

const fixedUseRole = async (t, role) => {
    await t.useRole(role);
    await reloadPage();
};

test('first login', async t => {
    await t.wait(3000);
    await fixedUseRole(t, role);
    await t.expect(Selector('h1').innerText).eql('Authorized');
});

test('second login', async t => {
    await t.wait(3000);
    await fixedUseRole(t, role);
    await t.expect(Selector('h1').innerText).eql('Authorized');
});
```

### Specify your

* testcafe version: 0.19.0",1,role doesn t work when page navigation doesn t trigger page reloading are you requesting a feature or reporting a bug bug what is the current behavior role doesn t work after first time initialization cookie localstorage and sessionstorage should be restored when a preserved page is loaded but the page changes only the hash and it isn t reloaded after navigating what is the expected behavior page must be reloaded after the userole function call how would you reproduce the current behavior if this is a bug node server js const http require http http createserver req res if req url res writehead content type text html res end log in var onhashchange function var newhash location hash if newhash if localstorage getitem isloggedin header textcontent authorized header style display block anchor style display none button style display none else header textcontent unauthorized anchor style display block button style display none else if newhash login if localstorage getitem isloggedin return location hash header style display none anchor style display none button style display block button addeventlistener click function localstorage setitem isloggedin true location hash onhashchange window addeventlistener hashchange onhashchange else res end listen provide the test code and the tested page url if applicable test code js import role clientfunction selector from testcafe fixture test authentication page const role role async t await t click input preserveurl true test first login async t await t wait userole role expect selector innertext eql authorized test second login async t await t wait userole role expect selector innertext eql authorized workaround js import role clientfunction selector from testcafe fixture test authentication page const role role async t await t click input preserveurl true const reloadpage new clientfunction location reload true const fixeduserole async t role await t userole role await reloadpage test first login 
async t await t wait await fixeduserole t role await t expect selector innertext eql authorized test second login async t await t wait await fixeduserole t role await t expect selector innertext eql authorized specify your testcafe version ,1

169943,20841989809.0,IssuesEvent,2022-03-21 02:02:07,michaeldotson/mini-capstone-vue-app,https://api.github.com/repos/michaeldotson/mini-capstone-vue-app,opened,CVE-2022-24772 (High) detected in node-forge-0.7.5.tgz,security vulnerability,"## CVE-2022-24772 - High Severity Vulnerability

Vulnerable Library - node-forge-0.7.5.tgz

JavaScript implementations of network transports, cryptography, ciphers, PKI, message digests, and various utilities.

Library home page: https://registry.npmjs.org/node-forge/-/node-forge-0.7.5.tgz

Path to dependency file: /mini-capstone-vue-app/package.json

Path to vulnerable library: /node_modules/node-forge/package.json

Dependency Hierarchy:

- cli-service-3.5.1.tgz (Root Library)

- webpack-dev-server-3.2.1.tgz

- selfsigned-1.10.4.tgz

- :x: **node-forge-0.7.5.tgz** (Vulnerable Library)

Vulnerability Details

Forge (also called `node-forge`) is a native implementation of Transport Layer Security in JavaScript. Prior to version 1.3.0, RSA PKCS#1 v1.5 signature verification code does not check for tailing garbage bytes after decoding a `DigestInfo` ASN.1 structure. This can allow padding bytes to be removed and garbage data added to forge a signature when a low public exponent is being used. The issue has been addressed in `node-forge` version 1.3.0. There are currently no known workarounds.

Publish Date: 2022-03-18

URL: CVE-2022-24772

CVSS 3 Score Details (7.5)

Base Score Metrics:

- Exploitability Metrics:

- Attack Vector: Network

- Attack Complexity: Low

- Privileges Required: None

- User Interaction: None

- Scope: Unchanged

- Impact Metrics:

- Confidentiality Impact: None

- Integrity Impact: High

- Availability Impact: None

For more information on CVSS3 Scores, click here.

Suggested Fix

Type: Upgrade version

Origin: https://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2022-24772

Release Date: 2022-03-18

Fix Resolution: node-forge - 1.3.0
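As a rough illustration of the fix resolution above, the following sketch checks whether an installed version string falls before 1.3.0. The helper names `compareVersions` and `isAffected` are hypothetical and not part of node-forge or any scanner; the comparison assumes plain dotted numeric versions and ignores pre-release tags.

```javascript
// Hypothetical helper: compare dotted numeric versions such as '0.7.5' and '1.3.0'.
// Returns -1, 0 or 1. Pre-release tags (for example '1.3.0-beta') are not handled.
function compareVersions(a, b) {
    const partsA = a.split('.').map(Number);
    const partsB = b.split('.').map(Number);
    const length = Math.max(partsA.length, partsB.length);

    for (let i = 0; i < length; i++) {
        // Missing components count as 0, so '1.3' equals '1.3.0'.
        const diff = (partsA[i] || 0) - (partsB[i] || 0);

        if (diff !== 0)
            return Math.sign(diff);
    }

    return 0;
}

// First fixed release according to the advisory text above.
const FIXED_VERSION = '1.3.0';

// Hypothetical helper: true when the installed version predates the fix.
function isAffected(installedVersion) {
    return compareVersions(installedVersion, FIXED_VERSION) < 0;
}

console.log(isAffected('0.7.5')); // true
console.log(isAffected('1.3.0')); // false
```

Because node-forge is a transitive dependency here (pulled in through selfsigned), the version that matters is the one actually resolved in the lock file, not the one a direct dependency declares.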

***

Step up your Open Source Security Game with WhiteSource [here](https://www.whitesourcesoftware.com/full_solution_bolt_github)",True,"CVE-2022-24772 (High) detected in node-forge-0.7.5.tgz - ## CVE-2022-24772 - High Severity Vulnerability

Vulnerable Library - node-forge-0.7.5.tgz

JavaScript implementations of network transports, cryptography, ciphers, PKI, message digests, and various utilities.

Library home page: https://registry.npmjs.org/node-forge/-/node-forge-0.7.5.tgz

Path to dependency file: /mini-capstone-vue-app/package.json

Path to vulnerable library: /node_modules/node-forge/package.json

Dependency Hierarchy:

- cli-service-3.5.1.tgz (Root Library)

- webpack-dev-server-3.2.1.tgz

- selfsigned-1.10.4.tgz

- :x: **node-forge-0.7.5.tgz** (Vulnerable Library)

Vulnerability Details

Forge (also called `node-forge`) is a native implementation of Transport Layer Security in JavaScript. Prior to version 1.3.0, RSA PKCS#1 v1.5 signature verification code does not check for tailing garbage bytes after decoding a `DigestInfo` ASN.1 structure. This can allow padding bytes to be removed and garbage data added to forge a signature when a low public exponent is being used. The issue has been addressed in `node-forge` version 1.3.0. There are currently no known workarounds.

Publish Date: 2022-03-18

URL: CVE-2022-24772

CVSS 3 Score Details (7.5)

Base Score Metrics:

- Exploitability Metrics:

- Attack Vector: Network

- Attack Complexity: Low

- Privileges Required: None

- User Interaction: None

- Scope: Unchanged

- Impact Metrics:

- Confidentiality Impact: None

- Integrity Impact: High

- Availability Impact: None

For more information on CVSS3 Scores, click here.

Suggested Fix

Type: Upgrade version

Origin: https://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2022-24772

Release Date: 2022-03-18

Fix Resolution: node-forge - 1.3.0

***

Step up your Open Source Security Game with WhiteSource [here](https://www.whitesourcesoftware.com/full_solution_bolt_github)",0,cve high detected in node forge tgz cve high severity vulnerability vulnerable library node forge tgz javascript implementations of network transports cryptography ciphers pki message digests and various utilities library home page a href path to dependency file mini capstone vue app package json path to vulnerable library node modules node forge package json dependency hierarchy cli service tgz root library webpack dev server tgz selfsigned tgz x node forge tgz vulnerable library vulnerability details forge also called node forge is a native implementation of transport layer security in javascript prior to version rsa pkcs signature verification code does not check for tailing garbage bytes after decoding a digestinfo asn structure this can allow padding bytes to be removed and garbage data added to forge a signature when a low public exponent is being used the issue has been addressed in node forge version there are currently no known workarounds publish date url a href cvss score details base score metrics exploitability metrics attack vector network attack complexity low privileges required none user interaction none scope unchanged impact metrics confidentiality impact none integrity impact high availability impact none for more information on scores click a href suggested fix type upgrade version origin a href release date fix resolution node forge step up your open source security game with whitesource ,0

1622,10469215037.0,IssuesEvent,2019-09-22 19:08:08,a-t-0/Productivity-phone,https://api.github.com/repos/a-t-0/Productivity-phone,opened,Sync multiple calendars with davdroid at once,Automation,"Currently, syncing with the (Google) calendars is set up through poor automation: human touch input is emulated programmatically on the phone from a PC.

Davdroid shows donation requests at random, which disrupts the control/click flow and desynchronizes the commands sent from the PC from the input the phone expects.

To solve this, modify Davdroid so that it asks for entire groups of calendar URLs at once (pasteable with a single press, or enterable via an API), and asks for the username and password only once per group (or reads the username from the input).",1.0,"Sync multiple calendars with davdroid at once - Currently, syncing with the (Google) calendars is set up through poor automation: human touch input is emulated programmatically on the phone from a PC.

Davdroid shows donation requests at random, which disrupts the control/click flow and desynchronizes the commands sent from the PC from the input the phone expects.

To solve this, modify Davdroid so that it asks for entire groups of calendar URLs at once (pasteable with a single press, or enterable via an API), and asks for the username and password only once per group (or reads the username from the input).",1,sync multiple calenders with davdroid at once currently setting up the syncing with the google calendars is performed poorly automated by emulating human touch programatically on the phone from a pc davdroid has random request for donating which disables the control click flow which leads to a async between commands given from pc and input required on phone to solve modify the davdroid so that it just asks the entire groups containing lists of calendar urls at once copy pastable with single press or enterable via api and asking the username and password only once per group or reading the username from the input ,1

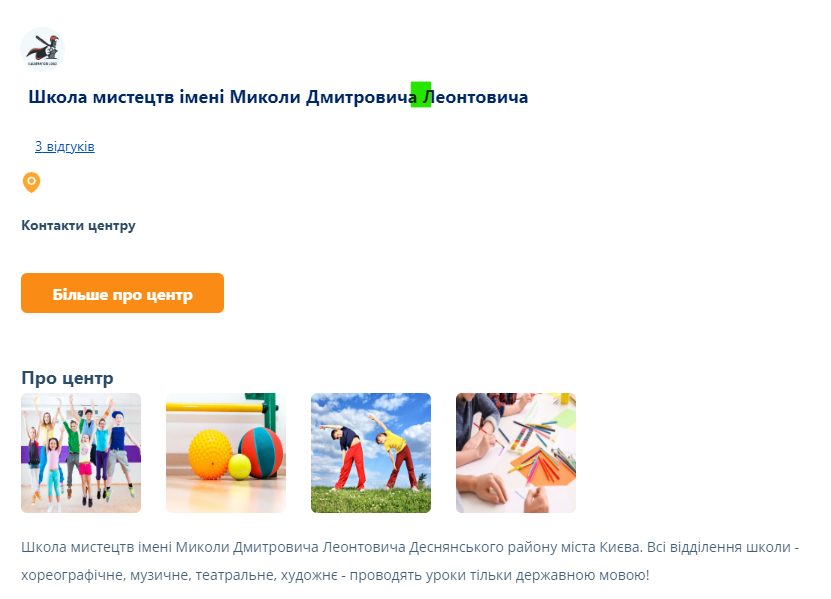

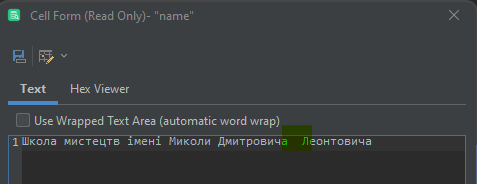

8151,26282565131.0,IssuesEvent,2023-01-07 13:18:52,ita-social-projects/TeachUA,https://api.github.com/repos/ita-social-projects/TeachUA,closed,[Advanced search] Different spelling of center title 'Школа мистецтв імені Миколи Дмитровича Леонтовича',bug Backend Priority: Low Automation,"**Environment:** Windows 11, Google Chrome Version 107.0.5304.107 (Official Build) (64-bit).

**Reproducible:** always.

**Build found:** last commit [7652f37](https://github.com/ita-social-projects/TeachUA/commit/7652f37a2d6de58fe02b06fb38c91acef4b623c7)

**Preconditions**

1. Go to the webpage: https://speak-ukrainian.org.ua/dev/

2. Go to 'Гуртки' tab.

3. Click on 'Розширений пошук' button.

**Steps to reproduce**

1. Click on 'Центр' radio button.

2. Make sure that 'Київ' city is selected (if not, select it).

3. Set 'Район міста' as 'Деснянський'.

4. Click on the center with title 'Школа мистецтв імені Миколи Дмитровича Леонтовича'.

5. Pay attention to the spelling of that title.

6. Go to a database.

7. Execute the following query:

SELECT DISTINCT c.name

FROM centers as c

INNER JOIN locations as l ON c.id=l.center_id

INNER JOIN cities as ct ON l.city_id=ct.id

INNER JOIN districts as ds ON l.district_id=ds.id

WHERE ct.name = 'Київ'

AND ds.name = 'Деснянський';

8. Double-click on the center title 'Школа мистецтв імені Миколи Дмитровича Леонтовича'.

**Actual result**

There are two spaces between the words 'Дмитровича' and 'Леонтовича' on DB.

UI:

DB:

**Expected result**

The center title 'Школа мистецтв імені Миколи Дмитровича Леонтовича' should be spelled the same in the UI and in the DB (with one space between the words 'Дмитровича' and 'Леонтовича').
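One possible way to reach the expected result is to collapse repeated whitespace before a title is stored or compared. This is only a sketch: `normalizeTitle` is a hypothetical helper, and the issue does not say whether the fix belongs in the backend, in a data migration, or elsewhere.

```javascript
// Hypothetical helper: collapse every run of whitespace into a single space
// and trim the ends. In JavaScript, \s also matches non-breaking spaces.
function normalizeTitle(title) {
    return title.replace(/\s+/g, ' ').trim();
}

// Title as described in the actual result: two spaces before the last word.
const storedTitle = 'Школа мистецтв імені Миколи Дмитровича' + '  ' + 'Леонтовича';

// Title as expected: a single space between every pair of words.
const expectedTitle = 'Школа мистецтв імені Миколи Дмитровича Леонтовича';

console.log(normalizeTitle(storedTitle) === expectedTitle); // true
```

Normalizing at write time keeps the stored value and the displayed value identical, whereas normalizing only at display time would leave the extra space in the DB, which is what the issue reports.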

**User story and test case links**

User story #274

[Test case](https://jira.softserve.academy/browse/TUA-455)

**Labels to be added**

""Bug"", Priority (""pri: "").

",1.0,"[Advanced search] Different spelling of center title 'Школа мистецтв імені Миколи Дмитровича Леонтовича' - **Environment:** Windows 11, Google Chrome Version 107.0.5304.107 (Official Build) (64-bit).

**Reproducible:** always.

**Build found:** last commit [7652f37](https://github.com/ita-social-projects/TeachUA/commit/7652f37a2d6de58fe02b06fb38c91acef4b623c7)

**Preconditions**

1. Go to the webpage: https://speak-ukrainian.org.ua/dev/

2. Go to 'Гуртки' tab.

3. Click on 'Розширений пошук' button.

**Steps to reproduce**

1. Click on 'Центр' radio button.

2. Make sure that 'Київ' city is selected (if not, select it).

3. Set 'Район міста' as 'Деснянський'.

4. Click on the center with title 'Школа мистецтв імені Миколи Дмитровича Леонтовича'.

5. Pay attention to the spelling of that title.

6. Go to a database.

7. Execute the following query:

SELECT DISTINCT c.name

FROM centers as c

INNER JOIN locations as l ON c.id=l.center_id

INNER JOIN cities as ct ON l.city_id=ct.id

INNER JOIN districts as ds ON l.district_id=ds.id

WHERE ct.name = 'Київ'

AND ds.name = 'Деснянський';

8. Double-click on the center title 'Школа мистецтв імені Миколи Дмитровича Леонтовича'.

**Actual result**

There are two spaces between the words 'Дмитровича' and 'Леонтовича' on DB.

UI:

DB:

**Expected result**

The center title 'Школа мистецтв імені Миколи Дмитровича Леонтовича' should be spelled the same in the UI and in the DB (with one space between the words 'Дмитровича' and 'Леонтовича').

**User story and test case links**

User story #274

[Test case](https://jira.softserve.academy/browse/TUA-455)

**Labels to be added**

""Bug"", Priority (""pri: "").

",1, different spelling of center title школа мистецтв імені миколи дмитровича леонтовича environment windows google chrome version official build bit reproducible always build found last commit preconditions go to the webpage go to гуртки tab click on розширений пошук button steps to reproduce click on центр radio button make sure that київ city is selected if not select it set район міста as деснянський click on the center with title школа мистецтв імені миколи дмитровича леонтовича pay attention to the spelling of that title go to a database execute the following query select distinct c name from centers as c inner join locations as l on c id l center id inner join cities as ct on l city id ct id inner join districts as ds on l district id ds id where ct name київ and ds name деснянський double click on the center title школа мистецтв імені миколи дмитровича леонтовича actual result there are two spaces between the words дмитровича and леонтовича on db ui db expected result center with title школа мистецтв імені миколи дмитровича леонтовича should have spelled the same on ui and db with one space between the words дмитровича and леонтовича user story and test case links user story labels to be added bug priority pri ,1

1926,11103549389.0,IssuesEvent,2019-12-17 04:21:03,bandprotocol/d3n,https://api.github.com/repos/bandprotocol/d3n,closed,Run EVM bridge automated test for every PR / commit,automation bridge chore,Setup CI to run tests for every push that affects `bridge/evm` directory.,1.0,Run EVM bridge automated test for every PR / commit - Setup CI to run tests for every push that affects `bridge/evm` directory.,1,run evm bridge automated test for every pr commit setup ci to run tests for every push that affects bridge evm directory ,1

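214628,16568902759.0,IssuesEvent,2021-05-30 01:43:21,SHOPFIFTEEN/FIFTEEN_FRONT,https://api.github.com/repos/SHOPFIFTEEN/FIFTEEN_FRONT,opened,1-34. Issue regarding Product Management - Registration,bug documentation,"Steps needed to reproduce the error (i.e., how the error was found)

On the admin screen, when registering a product, attempt to register with letters entered in fields that require numbers, such as shipping fee and discount rate

Expected behavior or result

An alert is shown stating how the relevant field should be corrected

Actual behavior or result

Registration simply fails with no alert shown (it is impossible to tell which field is in error)

Suggestion for fixing the error, if possible.

Needs to be changed so that an alert indicates which field is in error",1.0,"1-34. Issue regarding Product Management - Registration - Steps needed to reproduce the error (i.e., how the error was found)

On the admin screen, when registering a product, attempt to register with letters entered in fields that require numbers, such as shipping fee and discount rate

Expected behavior or result

An alert is shown stating how the relevant field should be corrected

Actual behavior or result

Registration simply fails with no alert shown (it is impossible to tell which field is in error)

Suggestion for fixing the error, if possible.

Needs to be changed so that an alert indicates which field is in error",0, issue regarding product management registration steps needed to reproduce the error i e how the error was found on the admin screen when registering a product attempt to register with letters entered in fields that require numbers such as shipping fee and discount rate expected behavior or result an alert is shown stating how the relevant field should be corrected actual behavior or result registration simply fails with no alert shown it is impossible to tell which field is in error suggestion for fixing the error if possible needs to be changed so that an alert indicates which field is in error,0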

7469,24946537777.0,IssuesEvent,2022-11-01 01:04:36,dannytsang/homeassistant-config,https://api.github.com/repos/dannytsang/homeassistant-config,opened, Change automations to ⌛timers,automations integration: smartthings,"Similar to #63 but for all other automations that use the ""for"" parameter in automation triggers.

Checklist:

- [ ] Bedroom heated blankets

- [ ] Fans

- [ ] Switches",1.0," Change automations to ⌛timers - Similar to #63 but for all other automations that use the ""for"" parameter in automation triggers.

Checklist:

- [ ] Bedroom heated blankets

- [ ] Fans

- [ ] Switches",1, change automations to ⌛timers similar to but for all other automations that use the for parameter in automation triggers checklist bedroom heated blankets fans switches,1

404,6229997195.0,IssuesEvent,2017-07-11 06:35:23,VP-Technologies/assistant-server,https://api.github.com/repos/VP-Technologies/assistant-server,opened,Implement Creation of Devices DB,automation,"Follow the spec from the doc, and create a test script with fake devices.",1.0,"Implement Creation of Devices DB - Follow the spec from the doc, and create a test script with fake devices.",1,implement creation of devices db follow the spec from the doc and create a test script with fake devices ,1

5978,21781161857.0,IssuesEvent,2022-05-13 19:05:29,dotnet/arcade,https://api.github.com/repos/dotnet/arcade,closed,CG work for dotnet-helix-machines,First Responder Detected By - Automation Helix-Machines Operations,"To drive our CG alert to Zero, please address the following items.

https://dnceng.visualstudio.com/internal/_componentGovernance/dotnet-helix-machines?_a=alerts&typeId=6377838&alerts-view-option=active

We need to address anything with a medium (or higher) priority",1.0,"CG work for dotnet-helix-machines - To drive our CG alert to Zero, please address the following items.

https://dnceng.visualstudio.com/internal/_componentGovernance/dotnet-helix-machines?_a=alerts&typeId=6377838&alerts-view-option=active

We need to address anything with a medium (or higher) priority",1,cg work for dotnet helix machines to drive our cg alert to zero please address the following items we need to address anything with a medium or higher priority,1

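4588,16961498009.0,IssuesEvent,2021-06-29 04:59:42,ecotiya/wicum,https://api.github.com/repos/ecotiya/wicum,opened,Introduce automated builds and automated tests,automation,"# [Functional requirements]

・Introduce automated builds and automated tests.

・For now, do this if time allows after the AWS deployment is complete.

# [Tasks]

- [ ] task1

- [ ] task2

- [ ] task3

# [Items to investigate]

",1.0,"Introduce automated builds and automated tests - # [Functional requirements]

・Introduce automated builds and automated tests.

・For now, do this if time allows after the AWS deployment is complete.

# [Tasks]

- [ ] task1

- [ ] task2

- [ ] task3

# [Items to investigate]

",1,introduce automated builds and automated tests functional requirements introduce automated builds and automated tests for now do this if time allows after the aws deployment is complete tasks items to investigate ,1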

2392,11862563563.0,IssuesEvent,2020-03-25 18:09:16,elastic/metricbeat-tests-poc,https://api.github.com/repos/elastic/metricbeat-tests-poc,closed,Validate Helm charts,automation,"Let's use a BDD approach to validate the official Helm charts for elastic. Something like this:

```gherkin

@helm

@k8s

@metricbeat

Feature: The Helm chart is following product recommended configuration for Kubernetes

Scenario: The Metricbeat chart will create recommended K8S resources

Given a cluster is running

When the ""metricbeat"" Elastic's helm chart is installed

Then a pod will be deployed on each node of the cluster by a DaemonSet

And a ""Deployment"" will manage additional pods for metricsets querying internal services

And a ""kube-state-metrics"" chart will retrieve specific Kubernetes metrics

And a ""ConfigMap"" resource contains the ""metricbeat.yml"" content

And a ""ConfigMap"" resource contains the ""kube-state-metrics-metricbeat.yml"" content

And a ""ServiceAccount"" resource manages RBAC

And a ""ClusterRole"" resource manages RBAC

And a ""ClusterRoleBinding"" resource manages RBAC

```",1.0,"Validate Helm charts - Let's use a BDD approach to validate the official Helm charts for elastic. Something like this:

```gherkin

@helm

@k8s

@metricbeat

Feature: The Helm chart is following product recommended configuration for Kubernetes

Scenario: The Metricbeat chart will create recommended K8S resources

Given a cluster is running

When the ""metricbeat"" Elastic's helm chart is installed

Then a pod will be deployed on each node of the cluster by a DaemonSet

And a ""Deployment"" will manage additional pods for metricsets querying internal services

And a ""kube-state-metrics"" chart will retrieve specific Kubernetes metrics

And a ""ConfigMap"" resource contains the ""metricbeat.yml"" content

And a ""ConfigMap"" resource contains the ""kube-state-metrics-metricbeat.yml"" content

And a ""ServiceAccount"" resource manages RBAC

And a ""ClusterRole"" resource manages RBAC

And a ""ClusterRoleBinding"" resource manages RBAC

```",1,validate helm charts let s use a bdd approach to validate the official helm charts for elastic something like this gherkin helm metricbeat feature the helm chart is following product recommended configuration for kubernetes scenario the metricbeat chart will create recommended resources given a cluster is running when the metricbeat elastic s helm chart is installed then a pod will be deployed on each node of the cluster by a daemonset and a deployment will manage additional pods for metricsets querying internal services and a kube state metrics chart will retrieve specific kubernetes metrics and a configmap resource contains the metricbeat yml content and a configmap resource contains the kube state metrics metricbeat yml content and a serviceaccount resource manages rbac and a clusterrole resource manages rbac and a clusterrolebinding resource manages rbac ,1

62496,6798459654.0,IssuesEvent,2017-11-02 05:45:28,minishift/minishift,https://api.github.com/repos/minishift/minishift,closed,make integration failed for cmd-openshift feature,component/integration-test kind/bug priority/major status/needs-info,"```

$ make integration GODOG_OPTS=""-tags cmd-openshift -format pretty""

go install -pkgdir=/home/amit/go/src/github.com/minishift/minishift/out/bindata -ldflags=""-X github.com/minishift/minishift/pkg/version.minishiftVersion=1.5.0 -X github.com/minishift/minishift/pkg/version.b2dIsoVersion=v1.1.0 -X github.com/minishift/minishift/pkg/version.centOsIsoVersion=v1.1.0 -X github.com/minishift/minishift/pkg/version.openshiftVersion=v3.6.0 -X github.com/minishift/minishift/pkg/version.commitSha=0e75c4ec"" ./cmd/minishift

mkdir -p /home/amit/go/src/github.com/minishift/minishift/out/integration-test

go test -timeout 3600s github.com/minishift/minishift/test/integration --tags=integration -v -args --test-dir /home/amit/go/src/github.com/minishift/minishift/out/integration-test --binary /home/amit/go/bin/minishift -tags cmd-openshift -format pretty

Test run using Boot2Docker iso image.

Keeping Minishift cache directory '/home/amit/go/src/github.com/minishift/minishift/out/integration-test/cache' for test run.

Log successfully started, logging into: /home/amit/go/src/github.com/minishift/minishift/out/integration-test/integration.log

Running Integration test in: /home/amit/go/src/github.com/minishift/minishift/out/integration-test

Using binary: /home/amit/go/bin/minishift

Feature: Basic

As a user I can perform basic operations of Minishift and OpenShift

Feature: Openshift commands

Commands ""minishift openshift [sub-command]"" are used for interaction with Openshift

cluster in VM provided by Minishift.

.

.

.

Scenario: Getting existing service without route # features/cmd-openshift.feature:67

When executing ""minishift openshift service nodejs-ex"" succeeds # integration_test.go:652 -> github.com/minishift/minishift/test/integration.executingMinishiftCommandSucceedsOrFails

Then stdout should contain ""nodejs-ex"" # integration_test.go:594 -> github.com/minishift/minishift/test/integration.commandReturnShouldContain

Output did not match. Expected: 'nodejs-ex', Actual: '|-----------|------|----------|-----------|--------|

| NAMESPACE | NAME | NODEPORT | ROUTE-URL | WEIGHT |

|-----------|------|----------|-----------|--------|

|-----------|------|----------|-----------|--------|

'

And stdout should not match

""""""

^http:\/\/nodejs-ex-myproject\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.nip\.io

""""""

Scenario: Getting existing service with route # features/cmd-openshift.feature:100

When executing ""minishift openshift service nodejs-ex"" succeeds # integration_test.go:652 -> github.com/minishift/minishift/test/integration.executingMinishiftCommandSucceedsOrFails

Then stdout should contain ""nodejs-ex"" # integration_test.go:594 -> github.com/minishift/minishift/test/integration.commandReturnShouldContain

Output did not match. Expected: 'nodejs-ex', Actual: '|-----------|------|----------|-----------|--------|

| NAMESPACE | NAME | NODEPORT | ROUTE-URL | WEIGHT |

|-----------|------|----------|-----------|--------|

|-----------|------|----------|-----------|--------|

'

And stdout should match

""""""

http:\/\/nodejs-ex-myproject\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.nip\.io

""""""

.

.

.

--- Failed scenarios:

features/cmd-openshift.feature:39

features/cmd-openshift.feature:69

features/cmd-openshift.feature:93

features/cmd-openshift.feature:102

features/cmd-openshift.feature:110

18 scenarios (13 passed, 5 failed)

48 steps (38 passed, 5 failed, 5 skipped)

2m22.19907923s

testing: warning: no tests to run

PASS

exit status 1

FAIL github.com/minishift/minishift/test/integration 142.214s

make: *** [Makefile:176: integration] Error 1

18:17 $

```",1.0,"make integration failed for cmd-openshift feature - ```

$ make integration GODOG_OPTS=""-tags cmd-openshift -format pretty""

go install -pkgdir=/home/amit/go/src/github.com/minishift/minishift/out/bindata -ldflags=""-X github.com/minishift/minishift/pkg/version.minishiftVersion=1.5.0 -X github.com/minishift/minishift/pkg/version.b2dIsoVersion=v1.1.0 -X github.com/minishift/minishift/pkg/version.centOsIsoVersion=v1.1.0 -X github.com/minishift/minishift/pkg/version.openshiftVersion=v3.6.0 -X github.com/minishift/minishift/pkg/version.commitSha=0e75c4ec"" ./cmd/minishift

mkdir -p /home/amit/go/src/github.com/minishift/minishift/out/integration-test

go test -timeout 3600s github.com/minishift/minishift/test/integration --tags=integration -v -args --test-dir /home/amit/go/src/github.com/minishift/minishift/out/integration-test --binary /home/amit/go/bin/minishift -tags cmd-openshift -format pretty

Test run using Boot2Docker iso image.

Keeping Minishift cache directory '/home/amit/go/src/github.com/minishift/minishift/out/integration-test/cache' for test run.

Log successfully started, logging into: /home/amit/go/src/github.com/minishift/minishift/out/integration-test/integration.log

Running Integration test in: /home/amit/go/src/github.com/minishift/minishift/out/integration-test

Using binary: /home/amit/go/bin/minishift

Feature: Basic

As a user I can perform basic operations of Minishift and OpenShift

Feature: Openshift commands

Commands ""minishift openshift [sub-command]"" are used for interaction with Openshift

cluster in VM provided by Minishift.

.

.

.

Scenario: Getting existing service without route # features/cmd-openshift.feature:67

When executing ""minishift openshift service nodejs-ex"" succeeds # integration_test.go:652 -> github.com/minishift/minishift/test/integration.executingMinishiftCommandSucceedsOrFails

Then stdout should contain ""nodejs-ex"" # integration_test.go:594 -> github.com/minishift/minishift/test/integration.commandReturnShouldContain

Output did not match. Expected: 'nodejs-ex', Actual: '|-----------|------|----------|-----------|--------|

| NAMESPACE | NAME | NODEPORT | ROUTE-URL | WEIGHT |

|-----------|------|----------|-----------|--------|

|-----------|------|----------|-----------|--------|

'

And stdout should not match

""""""

^http:\/\/nodejs-ex-myproject\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.nip\.io

""""""

Scenario: Getting existing service with route # features/cmd-openshift.feature:100

When executing ""minishift openshift service nodejs-ex"" succeeds # integration_test.go:652 -> github.com/minishift/minishift/test/integration.executingMinishiftCommandSucceedsOrFails

Then stdout should contain ""nodejs-ex"" # integration_test.go:594 -> github.com/minishift/minishift/test/integration.commandReturnShouldContain

Output did not match. Expected: 'nodejs-ex', Actual: '|-----------|------|----------|-----------|--------|

| NAMESPACE | NAME | NODEPORT | ROUTE-URL | WEIGHT |

|-----------|------|----------|-----------|--------|

|-----------|------|----------|-----------|--------|

'

And stdout should match

""""""

http:\/\/nodejs-ex-myproject\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.nip\.io

""""""

.

.

.

--- Failed scenarios:

features/cmd-openshift.feature:39

features/cmd-openshift.feature:69

features/cmd-openshift.feature:93

features/cmd-openshift.feature:102

features/cmd-openshift.feature:110

18 scenarios (13 passed, 5 failed)

48 steps (38 passed, 5 failed, 5 skipped)

2m22.19907923s

testing: warning: no tests to run

PASS

exit status 1

FAIL github.com/minishift/minishift/test/integration 142.214s

make: *** [Makefile:176: integration] Error 1

18:17 $

```",0,make integration failed for cmd openshift feature make integration godog opts tags cmd openshift format pretty go install pkgdir home amit go src github com minishift minishift out bindata ldflags x github com minishift minishift pkg version minishiftversion x github com minishift minishift pkg version x github com minishift minishift pkg version centosisoversion x github com minishift minishift pkg version openshiftversion x github com minishift minishift pkg version commitsha cmd minishift mkdir p home amit go src github com minishift minishift out integration test go test timeout github com minishift minishift test integration tags integration v args test dir home amit go src github com minishift minishift out integration test binary home amit go bin minishift tags cmd openshift format pretty test run using iso image keeping minishift cache directory home amit go src github com minishift minishift out integration test cache for test run log successfully started logging into home amit go src github com minishift minishift out integration test integration log running integration test in home amit go src github com minishift minishift out integration test using binary home amit go bin minishift feature basic as a user i can perform basic operations of minishift and openshift feature openshift commands commands minishift openshift are used for interaction with openshift cluster in vm provided by minishift scenario getting existing service without route features cmd openshift feature when executing minishift openshift service nodejs ex succeeds integration test go github com minishift minishift test integration executingminishiftcommandsucceedsorfails then stdout should contain nodejs ex integration test go github com minishift minishift test integration commandreturnshouldcontain output did not match expected nodejs ex actual namespace name nodeport route url weight and stdout should not match http nodejs ex myproject nip io scenario getting existing service 
with route features cmd openshift feature when executing minishift openshift service nodejs ex succeeds integration test go github com minishift minishift test integration executingminishiftcommandsucceedsorfails then stdout should contain nodejs ex integration test go github com minishift minishift test integration commandreturnshouldcontain output did not match expected nodejs ex actual namespace name nodeport route url weight and stdout should match http nodejs ex myproject nip io failed scenarios features cmd openshift feature features cmd openshift feature features cmd openshift feature features cmd openshift feature features cmd openshift feature scenarios passed failed steps passed failed skipped testing warning no tests to run pass exit status fail github com minishift minishift test integration make error ,0

6040,21940581337.0,IssuesEvent,2022-05-23 17:39:12,pharmaverse/admiral,https://api.github.com/repos/pharmaverse/admiral,closed,Create workflow to automatically create man files,automation,"The workflow should be triggered whenever something is pushed to `devel` or `master`, run `devtools::document()`, and commit any updated files in the `man` folder.",1.0,"Create workflow to automatically create man files - The workflow should be triggered whenever something is pushed to `devel` or `master`, run `devtools::document()`, and commit any updated files in the `man` folder.",1,create workflow to automatically create man files the workflow should be triggered whenever something is pushed to devel or master run devtools document and commit any updated files in the man folder ,1

137052,11097825807.0,IssuesEvent,2019-12-16 14:07:13,zeebe-io/zeebe,https://api.github.com/repos/zeebe-io/zeebe,closed,LogStreamTest.shouldCloseLogStream is unstable,Status: Needs Review Type: Maintenance Type: Unstable Test,"**Description**

Fails intermittently in CI.

```

[ERROR] Tests run: 4, Failures: 1, Errors: 0, Skipped: 0, Time elapsed: 2.074 s <<< FAILURE! - in io.zeebe.logstreams.log.LogStreamTest

[ERROR] io.zeebe.logstreams.log.LogStreamTest.shouldCloseLogStream Time elapsed: 0.683 s <<< FAILURE!

java.lang.AssertionError:

Expecting code to raise a throwable.

at io.zeebe.logstreams.log.LogStreamTest.shouldCloseLogStream(LogStreamTest.java:91)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.base/java.lang.reflect.Method.invoke(Method.java:566)

at org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:50)

at org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)

at org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:47)

at org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)

at org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)

at org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:48)

at org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:48)

at org.junit.rules.RunRules.evaluate(RunRules.java:20)

at org.junit.runners.ParentRunner.runLeaf(ParentRunner.java:325)

at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:78)

at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:57)

at org.junit.runners.ParentRunner$3.run(ParentRunner.java:290)

at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:71)

at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:288)

at org.junit.runners.ParentRunner.access$000(ParentRunner.java:58)

at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:268)

at org.junit.runners.ParentRunner.run(ParentRunner.java:363)

at org.junit.runners.Suite.runChild(Suite.java:128)

at org.junit.runners.Suite.runChild(Suite.java:27)

at org.junit.runners.ParentRunner$3.run(ParentRunner.java:290)

at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:71)

at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:288)

at org.junit.runners.ParentRunner.access$000(ParentRunner.java:58)

at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:268)

at org.junit.runners.ParentRunner.run(ParentRunner.java:363)

at org.apache.maven.surefire.junitcore.JUnitCore.run(JUnitCore.java:55)

at org.apache.maven.surefire.junitcore.JUnitCoreWrapper.createRequestAndRun(JUnitCoreWrapper.java:137)

at org.apache.maven.surefire.junitcore.JUnitCoreWrapper.executeLazy(JUnitCoreWrapper.java:119)

at org.apache.maven.surefire.junitcore.JUnitCoreWrapper.execute(JUnitCoreWrapper.java:87)

at org.apache.maven.surefire.junitcore.JUnitCoreWrapper.execute(JUnitCoreWrapper.java:75)

at org.apache.maven.surefire.junitcore.JUnitCoreProvider.invoke(JUnitCoreProvider.java:158)

at org.apache.maven.surefire.booter.ForkedBooter.runSuitesInProcess(ForkedBooter.java:377)

at org.apache.maven.surefire.booter.ForkedBooter.execute(ForkedBooter.java:138)

at org.apache.maven.surefire.booter.ForkedBooter.run(ForkedBooter.java:465)

at org.apache.maven.surefire.booter.ForkedBooter.main(ForkedBooter.java:451)

```

Output:

```

12:13:49.119 [] [main] INFO io.zeebe.test - Test finished: shouldCreateNewLogStreamBatchWriter(io.zeebe.logstreams.log.LogStreamTest)

12:13:49.120 [] [main] INFO io.zeebe.test - Test started: shouldCloseLogStream(io.zeebe.logstreams.log.LogStreamTest)

12:13:49.313 [io.zeebe.logstreams.impl.LogStreamBuilder$1] [-zb-actors-3] WARN io.zeebe.logstreams - Unexpected non-empty log failed to read the last block

12:13:49.318 [io.zeebe.logstreams.impl.log.LogStreamImpl] [-zb-actors-3] WARN io.zeebe.logstreams - Unexpected non-empty log failed to read the last block

12:13:49.533 [io.zeebe.logstreams.impl.log.LogStreamImpl] [-zb-actors-1] DEBUG io.zeebe.logstreams - Configured log appender back pressure at partition 0 as AppenderVegasCfg{initialLimit=1024, maxConcurrency=32768, alphaLimit=0.7, betaLimit=0.95}. Window limiting is disabled

12:13:49.600 [io.zeebe.logstreams.impl.log.LogStreamImpl] [-zb-actors-3] INFO io.zeebe.logstreams - Close appender for log stream 0

12:13:49.601 [0-write-buffer] [-zb-actors-3] DEBUG io.zeebe.dispatcher - Dispatcher closed

12:13:49.602 [io.zeebe.logstreams.impl.log.LogStreamImpl] [-zb-actors-1] INFO io.zeebe.logstreams - On closing logstream 0 close 1 readers

12:13:49.603 [io.zeebe.logstreams.impl.log.LogStreamImpl] [-zb-actors-1] INFO io.zeebe.logstreams - Close log storage with name 0

```",1.0,"LogStreamTest.shouldCloseLogStream is unstable - **Description**

Fails intermittently in CI.

```

[ERROR] Tests run: 4, Failures: 1, Errors: 0, Skipped: 0, Time elapsed: 2.074 s <<< FAILURE! - in io.zeebe.logstreams.log.LogStreamTest

[ERROR] io.zeebe.logstreams.log.LogStreamTest.shouldCloseLogStream Time elapsed: 0.683 s <<< FAILURE!

java.lang.AssertionError:

Expecting code to raise a throwable.

at io.zeebe.logstreams.log.LogStreamTest.shouldCloseLogStream(LogStreamTest.java:91)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.base/java.lang.reflect.Method.invoke(Method.java:566)

at org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:50)

at org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)

at org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:47)

at org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)

at org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)

at org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:48)

at org.junit.rules.ExternalResource$1.evaluate(ExternalResource.java:48)

at org.junit.rules.RunRules.evaluate(RunRules.java:20)

at org.junit.runners.ParentRunner.runLeaf(ParentRunner.java:325)

at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:78)

at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:57)

at org.junit.runners.ParentRunner$3.run(ParentRunner.java:290)

at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:71)

at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:288)

at org.junit.runners.ParentRunner.access$000(ParentRunner.java:58)

at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:268)

at org.junit.runners.ParentRunner.run(ParentRunner.java:363)

at org.junit.runners.Suite.runChild(Suite.java:128)

at org.junit.runners.Suite.runChild(Suite.java:27)

at org.junit.runners.ParentRunner$3.run(ParentRunner.java:290)

at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:71)

at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:288)

at org.junit.runners.ParentRunner.access$000(ParentRunner.java:58)

at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:268)

at org.junit.runners.ParentRunner.run(ParentRunner.java:363)

at org.apache.maven.surefire.junitcore.JUnitCore.run(JUnitCore.java:55)

at org.apache.maven.surefire.junitcore.JUnitCoreWrapper.createRequestAndRun(JUnitCoreWrapper.java:137)

at org.apache.maven.surefire.junitcore.JUnitCoreWrapper.executeLazy(JUnitCoreWrapper.java:119)

at org.apache.maven.surefire.junitcore.JUnitCoreWrapper.execute(JUnitCoreWrapper.java:87)

at org.apache.maven.surefire.junitcore.JUnitCoreWrapper.execute(JUnitCoreWrapper.java:75)

at org.apache.maven.surefire.junitcore.JUnitCoreProvider.invoke(JUnitCoreProvider.java:158)

at org.apache.maven.surefire.booter.ForkedBooter.runSuitesInProcess(ForkedBooter.java:377)

at org.apache.maven.surefire.booter.ForkedBooter.execute(ForkedBooter.java:138)

at org.apache.maven.surefire.booter.ForkedBooter.run(ForkedBooter.java:465)

at org.apache.maven.surefire.booter.ForkedBooter.main(ForkedBooter.java:451)

```

Output:

```

12:13:49.119 [] [main] INFO io.zeebe.test - Test finished: shouldCreateNewLogStreamBatchWriter(io.zeebe.logstreams.log.LogStreamTest)

12:13:49.120 [] [main] INFO io.zeebe.test - Test started: shouldCloseLogStream(io.zeebe.logstreams.log.LogStreamTest)

12:13:49.313 [io.zeebe.logstreams.impl.LogStreamBuilder$1] [-zb-actors-3] WARN io.zeebe.logstreams - Unexpected non-empty log failed to read the last block

12:13:49.318 [io.zeebe.logstreams.impl.log.LogStreamImpl] [-zb-actors-3] WARN io.zeebe.logstreams - Unexpected non-empty log failed to read the last block

12:13:49.533 [io.zeebe.logstreams.impl.log.LogStreamImpl] [-zb-actors-1] DEBUG io.zeebe.logstreams - Configured log appender back pressure at partition 0 as AppenderVegasCfg{initialLimit=1024, maxConcurrency=32768, alphaLimit=0.7, betaLimit=0.95}. Window limiting is disabled

12:13:49.600 [io.zeebe.logstreams.impl.log.LogStreamImpl] [-zb-actors-3] INFO io.zeebe.logstreams - Close appender for log stream 0

12:13:49.601 [0-write-buffer] [-zb-actors-3] DEBUG io.zeebe.dispatcher - Dispatcher closed

12:13:49.602 [io.zeebe.logstreams.impl.log.LogStreamImpl] [-zb-actors-1] INFO io.zeebe.logstreams - On closing logstream 0 close 1 readers

12:13:49.603 [io.zeebe.logstreams.impl.log.LogStreamImpl] [-zb-actors-1] INFO io.zeebe.logstreams - Close log storage with name 0

```",0,logstreamtest shouldcloselogstream unstabled description failed sometimes in the ci tests run failures errors skipped time elapsed s failure in io zeebe logstreams log logstreamtest io zeebe logstreams log logstreamtest shouldcloselogstream time elapsed s failure java lang assertionerror expecting code to raise a throwable at io zeebe logstreams log logstreamtest shouldcloselogstream logstreamtest java at java base jdk internal reflect nativemethodaccessorimpl native method at java base jdk internal reflect nativemethodaccessorimpl invoke nativemethodaccessorimpl java at java base jdk internal reflect delegatingmethodaccessorimpl invoke delegatingmethodaccessorimpl java at java base java lang reflect method invoke method java at org junit runners model frameworkmethod runreflectivecall frameworkmethod java at org junit internal runners model reflectivecallable run reflectivecallable java at org junit runners model frameworkmethod invokeexplosively frameworkmethod java at org junit internal runners statements invokemethod evaluate invokemethod java at org junit internal runners statements runbefores evaluate runbefores java at org junit rules externalresource evaluate externalresource java at org junit rules externalresource evaluate externalresource java at org junit rules runrules evaluate runrules java at org junit runners parentrunner runleaf parentrunner java at org junit runners runchild java at org junit runners runchild java at org junit runners parentrunner run parentrunner java at org junit runners parentrunner schedule parentrunner java at org junit runners parentrunner runchildren parentrunner java at org junit runners parentrunner access parentrunner java at org junit runners parentrunner evaluate parentrunner java at org junit runners parentrunner run parentrunner java at org junit runners suite runchild suite java at org junit runners suite runchild suite java at org junit runners parentrunner run parentrunner java at org junit runners 
parentrunner schedule parentrunner java at org junit runners parentrunner runchildren parentrunner java at org junit runners parentrunner access parentrunner java at org junit runners parentrunner evaluate parentrunner java at org junit runners parentrunner run parentrunner java at org apache maven surefire junitcore junitcore run junitcore java at org apache maven surefire junitcore junitcorewrapper createrequestandrun junitcorewrapper java at org apache maven surefire junitcore junitcorewrapper executelazy junitcorewrapper java at org apache maven surefire junitcore junitcorewrapper execute junitcorewrapper java at org apache maven surefire junitcore junitcorewrapper execute junitcorewrapper java at org apache maven surefire junitcore junitcoreprovider invoke junitcoreprovider java at org apache maven surefire booter forkedbooter runsuitesinprocess forkedbooter java at org apache maven surefire booter forkedbooter execute forkedbooter java at org apache maven surefire booter forkedbooter run forkedbooter java at org apache maven surefire booter forkedbooter main forkedbooter java output info io zeebe test test finished shouldcreatenewlogstreambatchwriter io zeebe logstreams log logstreamtest info io zeebe test test started shouldcloselogstream io zeebe logstreams log logstreamtest warn io zeebe logstreams unexpected non empty log failed to read the last block warn io zeebe logstreams unexpected non empty log failed to read the last block debug io zeebe logstreams configured log appender back pressure at partition as appendervegascfg initiallimit maxconcurrency alphalimit betalimit window limiting is disabled info io zeebe logstreams close appender for log stream debug io zeebe dispatcher dispatcher closed info io zeebe logstreams on closing logstream close readers info io zeebe logstreams close log storage with name ,0

281243,30888436302.0,IssuesEvent,2023-08-04 01:19:37,hshivhare67/kernel_v4.1.15,https://api.github.com/repos/hshivhare67/kernel_v4.1.15,reopened,CVE-2017-12762 (Critical) detected in linuxlinux-4.6,Mend: dependency security vulnerability,"## CVE-2017-12762 - Critical Severity Vulnerability

Vulnerable Library - linuxlinux-4.6

The Linux Kernel

Library home page: https://mirrors.edge.kernel.org/pub/linux/kernel/v4.x/?wsslib=linux

Found in base branch: master

Vulnerable Source Files (2)

/drivers/isdn/i4l/isdn_common.c

/drivers/isdn/i4l/isdn_common.c

Vulnerability Details

In /drivers/isdn/i4l/isdn_net.c: A user-controlled buffer is copied into a local buffer of constant size using strcpy without a length check which can cause a buffer overflow. This affects the Linux kernel 4.9-stable tree, 4.12-stable tree, 3.18-stable tree, and 4.4-stable tree.

Publish Date: 2017-08-09

URL: CVE-2017-12762

CVSS 3 Score Details (9.8)

Base Score Metrics:
- Exploitability Metrics:
  - Attack Vector: Network
  - Attack Complexity: Low
  - Privileges Required: None
  - User Interaction: None
  - Scope: Unchanged
- Impact Metrics:
  - Confidentiality Impact: High
  - Integrity Impact: High
  - Availability Impact: High
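
The 9.8 base score follows from the metric values listed above. The sketch below recomputes it in Python using the base-score equations and metric weights as published in the CVSS v3 specification. The function and dictionary names are illustrative assumptions and are not part of the Mend report, and the rounding helper follows the integer-based "roundup" described in CVSS v3.1.

```python
import math

# Metric weights as published in the CVSS v3 specification.
AV = {"Network": 0.85, "Adjacent": 0.62, "Local": 0.55, "Physical": 0.2}
AC = {"Low": 0.77, "High": 0.44}
UI = {"None": 0.85, "Required": 0.62}
CIA = {"High": 0.56, "Low": 0.22, "None": 0.0}
# The Privileges Required weight depends on whether Scope is changed.
PR_UNCHANGED = {"None": 0.85, "Low": 0.62, "High": 0.27}
PR_CHANGED = {"None": 0.85, "Low": 0.68, "High": 0.5}


def roundup(value):
    """Round up to one decimal place, avoiding floating-point drift."""
    scaled = round(value * 100000)
    if scaled % 10000 == 0:
        return scaled / 100000.0
    return (math.floor(scaled / 10000) + 1) / 10.0


def base_score(av, ac, pr, ui, scope, c, i, a):
    """Compute a CVSS v3 base score from the metric names used in the report."""
    changed = scope == "Changed"
    pr_weight = (PR_CHANGED if changed else PR_UNCHANGED)[pr]
    # Impact sub-score.
    iss = 1 - (1 - CIA[c]) * (1 - CIA[i]) * (1 - CIA[a])
    if changed:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    else:
        impact = 6.42 * iss
    if impact <= 0:
        return 0.0
    # Exploitability sub-score.
    exploitability = 8.22 * AV[av] * AC[ac] * pr_weight * UI[ui]
    total = impact + exploitability
    if changed:
        total *= 1.08
    return roundup(min(total, 10))


# Metrics reported for CVE-2017-12762.
score = base_score("Network", "Low", "None", "None", "Unchanged",
                   "High", "High", "High")
print(score)
```

The same function can be called with `scope="Changed"` for vectors that report a changed scope.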

Suggested Fix

Type: Upgrade version

Release Date: 2017-08-09

Fix Resolution: 3.18.64, v4.13-rc4, 4.12.5, 4.4.80, 4.9.41
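
As a rough illustration of how the fix resolution can be applied, the sketch below checks a three-part kernel version against the fixed stable releases listed above. The `FIXED` table and the `patch_status` helper are hypothetical names introduced here. The `v4.13-rc4` entry is left out because it is a release candidate rather than a stable point release, and versions from trees the report does not list are reported as unknown rather than guessed.

```python
# Fixed patch level per stable tree, copied from the "Fix Resolution" line.
# (major, minor) -> first patch release that contains the fix.
FIXED = {(3, 18): 64, (4, 4): 80, (4, 9): 41, (4, 12): 5}


def patch_status(version):
    """Classify a 'major.minor.patch' kernel version against FIXED."""
    major, minor, patch = (int(part) for part in version.split("."))
    fixed_patch = FIXED.get((major, minor))
    if fixed_patch is None:
        # The report lists no fixed release for this stable tree.
        return "unknown"
    return "fixed" if patch >= fixed_patch else "vulnerable"


for candidate in ("4.9.40", "4.9.41", "4.6.0"):
    print(candidate, patch_status(candidate))
```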

Vulnerable Libraries - bootstrap-3.3.7.tgz, bootstrap-3.1.1.tgz

bootstrap-3.3.7.tgz

The most popular front-end framework for developing responsive, mobile first projects on the web.

Library home page: https://registry.npmjs.org/bootstrap/-/bootstrap-3.3.7.tgz

Path to dependency file: angular/package.json

Path to vulnerable library: angular/node_modules/bootstrap

Dependency Hierarchy:
- angular-benchpress-0.2.2.tgz (Root Library)
  - :x: **bootstrap-3.3.7.tgz** (Vulnerable Library)

bootstrap-3.1.1.tgz

Sleek, intuitive, and powerful front-end framework for faster and easier web development.

Library home page: https://registry.npmjs.org/bootstrap/-/bootstrap-3.1.1.tgz

Path to dependency file: angular/package.json

Path to vulnerable library: angular/node_modules/bootstrap

Dependency Hierarchy:
- :x: **bootstrap-3.1.1.tgz** (Vulnerable Library)

Found in HEAD commit: 72f7fdba608ab5ba7ff145a21673661d5316ebaa

Found in base branch: master

Vulnerability Details

In Bootstrap before 3.4.0, XSS is possible in the affix configuration target property.

Publish Date: 2019-01-09

URL: CVE-2018-20677

CVSS 3 Score Details (6.1)

Base Score Metrics:
- Exploitability Metrics:
  - Attack Vector: Network
  - Attack Complexity: Low
  - Privileges Required: None
  - User Interaction: Required
  - Scope: Changed
- Impact Metrics:
  - Confidentiality Impact: Low
  - Integrity Impact: Low
  - Availability Impact: None

Suggested Fix

Type: Upgrade version

Origin: https://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2018-20677

Release Date: 2019-01-09

Fix Resolution: Bootstrap - v3.4.0; NorDroN.AngularTemplate - 0.1.6; Dynamic.NET.Express.ProjectTemplates - 0.8.0; dotnetng.template - 1.0.0.4; ZNxtApp.Core.Module.Theme - 1.0.9-Beta; JMeter - 5.0.0

Vulnerable Library - select2-4.0.0.tgz

Select2 is a jQuery based replacement for select boxes. It supports searching, remote data sets, and infinite scrolling of results.

Library home page: https://registry.npmjs.org/select2/-/select2-4.0.0.tgz

Path to dependency file: /hadoop-yarn-project/hadoop-yarn/hadoop-yarn-ui/src/main/webapp/package.json

Path to vulnerable library: /hadoop-yarn-project/hadoop-yarn/hadoop-yarn-ui/src/main/webapp/node_modules/select2/package.json

Dependency Hierarchy:
- :x: **select2-4.0.0.tgz** (Vulnerable Library)

Found in base branch: trunk

Vulnerability Details

In Select2 through 4.0.5, as used in Snipe-IT and other products, rich selectlists allow XSS. This affects use cases with Ajax remote data loading when HTML templates are used to display listbox data.
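
The underlying problem is that remotely loaded text ends up in an HTML template unescaped. The sketch below illustrates the general mitigation in Python using the standard library's `html.escape`. It is not the Select2 fix itself, which lives in the library's JavaScript code, and `render_option` is a hypothetical helper name used only for illustration.

```python
import html


def render_option(label):
    """Embed an untrusted label in markup after escaping HTML metacharacters."""
    # html.escape replaces &, <, > and, by default, both quote characters.
    return '<span class="option">' + html.escape(label) + "</span>"


# A label as it might arrive from a remote data source.
untrusted = "<img src=x onerror=alert(1)>"
print(render_option(untrusted))
```

With escaping applied, the payload is rendered as literal text instead of being parsed as an element.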

Publish Date: 2019-03-27

URL: CVE-2016-10744

CVSS 3 Score Details (6.1)

Base Score Metrics:
- Exploitability Metrics:
  - Attack Vector: Network
  - Attack Complexity: Low
  - Privileges Required: None
  - User Interaction: Required
  - Scope: Changed
- Impact Metrics:
  - Confidentiality Impact: Low
  - Integrity Impact: Low
  - Availability Impact: None

Suggested Fix

Type: Upgrade version

Origin: https://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2016-10744

Release Date: 2019-03-27

Fix Resolution: 4.0.8

Vulnerable Libraries - json5-1.0.1.tgz, json5-2.2.0.tgz, json5-0.5.1.tgz

json5-1.0.1.tgz

JSON for humans.

Library home page: https://registry.npmjs.org/json5/-/json5-1.0.1.tgz

Path to dependency file: /console2/package.json

Path to vulnerable library: /console2/node_modules/html-webpack-plugin/node_modules/json5/package.json,/console2/node_modules/tsconfig-paths/node_modules/json5/package.json,/console2/node_modules/resolve-url-loader/node_modules/json5/package.json,/console2/node_modules/postcss-loader/node_modules/json5/package.json,/console2/node_modules/babel-loader/node_modules/json5/package.json,/console2/node_modules/mini-css-extract-plugin/node_modules/json5/package.json,/console2/node_modules/webpack/node_modules/json5/package.json

Dependency Hierarchy:
- react-scripts-4.0.3.tgz (Root Library)
  - babel-loader-8.1.0.tgz
    - loader-utils-1.4.0.tgz
      - :x: **json5-1.0.1.tgz** (Vulnerable Library)

json5-2.2.0.tgz

JSON for humans.

Library home page: https://registry.npmjs.org/json5/-/json5-2.2.0.tgz

Path to dependency file: /console2/package.json

Path to vulnerable library: /console2/node_modules/json5/package.json

Dependency Hierarchy:
- react-scripts-4.0.3.tgz (Root Library)
  - core-7.12.3.tgz
    - :x: **json5-2.2.0.tgz** (Vulnerable Library)

json5-0.5.1.tgz

JSON for the ES5 era.

Library home page: https://registry.npmjs.org/json5/-/json5-0.5.1.tgz

Path to dependency file: /console2/package.json

Path to vulnerable library: /console2/node_modules/babel-register/node_modules/json5/package.json,/console2/node_modules/babel-cli/node_modules/json5/package.json

Dependency Hierarchy:
- babel-cli-6.26.0.tgz (Root Library)
  - babel-core-6.26.3.tgz
    - :x: **json5-0.5.1.tgz** (Vulnerable Library)

Found in HEAD commit: b9420f3b9e73a9d381266ece72f7afb756f35a76

Found in base branch: master

Vulnerability Details
